Use Kappa to Describe Agreement Interpret

The article shows agreement on physical examination findings of the chest. Kappa is the proportion of agreement over and above chance agreement.


For Observers A and B, κ = 0.37, whereas for Observers A and C, κ = 0.00, as it is for Observers A and D.

It gives a score of how much homogeneity or consensus there is in the ratings given by judges. For example, $\kappa = 1 - \frac{1 - 0.7}{1 - 0.53} \approx 0.36$. How to interpret kappa.
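The arithmetic in the paragraph above can be checked with a short sketch. It assumes the figures read as an observed agreement of 0.7 and a chance agreement of 0.53; the function name `kappa_from_proportions` is hypothetical and used only for illustration.

```python
def kappa_from_proportions(p_observed: float, p_expected: float) -> float:
    """Cohen's kappa written as 1 - (1 - p_o) / (1 - p_e)."""
    if p_expected >= 1.0:
        # The denominator vanishes when chance agreement is already total.
        raise ValueError("kappa is undefined when expected agreement is 1")
    return 1.0 - (1.0 - p_observed) / (1.0 - p_expected)


# Assumed inputs: observed agreement 0.7, chance agreement 0.53.
kappa = kappa_from_proportions(0.7, 0.53)
print(round(kappa, 2))  # 0.36
```

This form is algebraically the same as the ratio of (observed minus expected) to (1 minus expected); it only makes the "share of possible disagreement removed" reading more visible.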

Pr(a) is the observed percentage of agreement and Pr(e) is the expected percentage of agreement. Kappa is a measurement of interrater reliability.

Since the observed agreement is larger than chance agreement, we'll get a positive kappa. Coefficients will be given in Section 3 and illustrated in Section 4. In statistics, inter-rater reliability, inter-rater agreement, or concordance is the degree of agreement among raters.

(2) a new, simple and practical interpretation of the linear and quadratic weighted kappa. The kappa statistic is applied to interpret data that are the result of a judgement rather than a measurement. Cohen's kappa is a widely used association coefficient for summarizing interrater agreement on a nominal scale.

The Kappa Statistic (MIT Press Journals). Abstract: The kappa statistic is used to describe inter-rater agreement and reliability. It is defined as $\kappa = \frac{\Pr(a) - \Pr(e)}{1 - \Pr(e)}$.

Cohen's kappa is calculated using the formula above. When kappa = 0, agreement is the same as would be expected by chance. Fleiss's kappa is a generalization of Cohen's kappa for more than 2 raters.
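As a rough illustration of the generalization to more than 2 raters, the sketch below follows the standard textbook definition of Fleiss's kappa. The input layout (one row per subject, one column per category, each cell holding the number of raters who chose that category) and the counts themselves are assumptions made for the example.

```python
def fleiss_kappa(counts):
    """Fleiss's kappa for a table of per-subject category counts.

    counts[i][j] is the number of raters who put subject i in category j.
    Every subject must be rated by the same number of raters (at least 2).
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each subject needs the same number (>= 2) of ratings")

    # Per-subject agreement: share of rater pairs that agree on the subject.
    per_subject = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(per_subject) / n_subjects

    # Chance agreement from the overall proportion of ratings per category.
    n_categories = len(counts[0])
    total = n_subjects * n_raters
    shares = [sum(row[j] for row in counts) / total for j in range(n_categories)]
    p_e = sum(s * s for s in shares)

    return (p_bar - p_e) / (1 - p_e)


# Hypothetical data: 4 subjects, 3 raters, 2 categories.
table = [[2, 1], [1, 2], [3, 0], [0, 3]]
result = fleiss_kappa(table)
print(round(result, 3))
```

Note that the function raises a division error when every rating falls in a single category, because chance agreement is then 1 and kappa is undefined.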

More formally, kappa is a robust way to find the degree of agreement between two raters/judges where the task is to put N items in K mutually exclusive categories. The higher the value of kappa, the stronger the agreement.

For perfect agreement, κ = 1. How do you interpret these levels of agreement, taking into account the kappa statistic? The formula for Cohen's kappa is the one given above.

There are a number of statistics that have been used to measure interrater and intrarater reliability. A partial list includes percent agreement, Cohen's kappa (for two raters), the Fleiss kappa (an adaptation of Cohen's kappa for 3 or more raters), the contingency coefficient, the Pearson r and the Spearman rho, and the intra-class correlation coefficient. Cohen's kappa is a measure of the agreement between two raters who have recorded a categorical outcome for a number of individuals.

Based on the guidelines from Altman. Kappa is a ratio consisting of (1) P(a), the probability of actual or observed agreement, which is 0.6 in the above example, and (2) P(e), the probability of expected agreement, or that which occurs by chance. MedCalc offers two sets of weights, called linear and quadratic.

Now that you have run the Cohen's kappa procedure, we show you how to interpret and report your results. When kappa = 1, perfect agreement exists. With three or more categories, it is more informative to summarize the ratings by category coefficients that describe the information for each category separately.

Kappa is always less than or equal to 1. Can also be used to calculate se. The formula is $\kappa = \frac{P(a) - P(e)}{1 - P(e)}$. Let's calculate $P(e)$, the probability of expected agreement.
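One common way to obtain the expected agreement is to multiply, for each category, the share of items each rater assigned to it, and then add the products. The sketch below assumes that approach; the ratings and the names `rater_a` and `rater_b` are hypothetical.

```python
from collections import Counter


def expected_agreement(ratings_a, ratings_b):
    """Chance agreement P(e) from two raters' category proportions."""
    n = len(ratings_a)
    if n == 0 or n != len(ratings_b):
        raise ValueError("both raters must rate the same non-empty set of items")
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    categories = set(counts_a) | set(counts_b)
    # For each category: P(rater A picks it) * P(rater B picks it).
    return sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)


# Hypothetical ratings of 10 items by two raters.
rater_a = ["yes"] * 6 + ["no"] * 4
rater_b = ["yes"] * 5 + ["no"] * 5

p_e = expected_agreement(rater_a, rater_b)
p_a = sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)
kappa = (p_a - p_e) / (1 - p_e)
print(round(p_e, 2), round(p_a, 2), round(kappa, 2))  # 0.5 0.9 0.8
```

Here rater A says "yes" 60% of the time and rater B 50% of the time, so chance alone would produce agreement on 0.6 × 0.5 + 0.4 × 0.5 = 0.5 of the items.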

The value of kappa may increase if two categories are combined. Cohen's kappa is a popular statistic for measuring assessment agreement between 2 raters. When the need is more complex, viz. to measure agreement among several raters, Cohen's kappa can be used in a generalized form as Fleiss's kappa (Fleiss).

Kappa values range from -1 to 1.

You see that there was 79% agreement on the presence of wheezing with a kappa of 0.51 and 85% agreement on the presence of tactile fremitus with a kappa of 0.01. Kappa reduces the ratings of the two observers to a single number.

Cohen's kappa (κ) can range from -1 to 1. It is used for determining consistency of agreement between 2 raters or between 2 types of classification systems on a dichotomous outcome.

A value of 1 implies perfect agreement and values less than 1 imply less than perfect agreement. For example, Table 1 has four categories and there are $4(4-1)/2 = 6$ ways to combine two categories. Use kappa to describe agreement.

The following classifications have been proposed to interpret the strength of agreement as a function of Cohen's kappa value (Altman, 1999; Landis JR, 1977).
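The classification tables themselves are not reproduced here. As a sketch, the function below encodes the commonly cited Landis and Koch (1977) bands; the cut-offs are conventions rather than statistical thresholds, and the function name `interpret_kappa` is hypothetical.

```python
def interpret_kappa(kappa: float) -> str:
    """Map a kappa value to the commonly cited Landis and Koch (1977) label."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0.0:
        return "poor"
    # Upper bound of each band, checked in ascending order.
    bands = [
        (0.20, "slight"),
        (0.40, "fair"),
        (0.60, "moderate"),
        (0.80, "substantial"),
        (1.00, "almost perfect"),
    ]
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


print(interpret_kappa(0.51))  # moderate
print(interpret_kappa(0.01))  # slight
```

Altman's guidelines use the same cut-offs from 0.21 upward with different wording (fair, moderate, good, very good) and label values up to 0.20 as poor, so the labels should always be reported together with the scheme they come from.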

To calculate Cohen's kappa for Within Appraiser, you must have 2 trials for each appraiser.

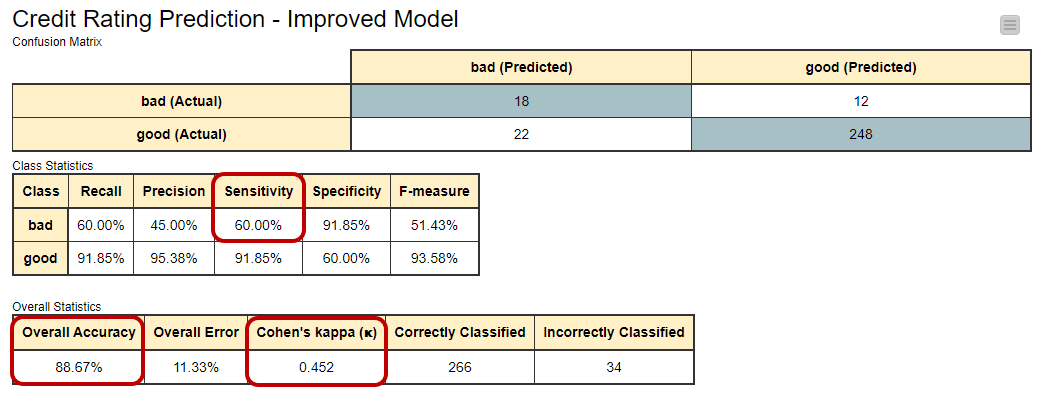

Like many other scoring measures, Cohen's kappa is calculated on the basis of the confusion matrix. In rare situations, kappa can be negative. Cohen's kappa factors out agreement due to chance, and the two raters either agree or disagree on the category that each subject is assigned to (the level of agreement is not weighted).
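A minimal sketch of the confusion-matrix calculation follows. It assumes a square matrix in which rows are one rater's categories and columns are the other's; both example matrices are hypothetical, and the second one shows how systematic disagreement produces a negative kappa.

```python
def cohen_kappa(matrix):
    """Unweighted Cohen's kappa from a square confusion matrix of counts."""
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    # Observed agreement: share of items on the diagonal.
    p_observed = sum(matrix[i][i] for i in range(k)) / n
    # Expected agreement: product of matching row and column marginals.
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    p_expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n**2
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical counts: 35 of 50 items on the diagonal.
mostly_agree = [[20, 5], [10, 15]]
# Hypothetical counts: the raters never pick the same category.
always_disagree = [[0, 5], [5, 0]]

print(round(cohen_kappa(mostly_agree), 2))     # 0.4
print(round(cohen_kappa(always_disagree), 2))  # -1.0
```

In the first matrix the observed agreement is 0.7 and the chance agreement is 0.5, which gives a kappa of 0.4.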

The observed percentage of agreement is the proportion of ratings where the raters agree, and the expected percentage is the proportion of agreements that are expected to occur by chance. Therefore, when the categories are ordered, it is preferable to use weighted kappa (Cohen, 1968) and assign different weights $w_i$ to subjects for whom the raters differ by $i$ categories, so that different levels of agreement can contribute to the value of kappa. We will have perfect agreement when all agree, so $p = 1$.
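The weighting idea can be sketched as follows. This version uses disagreement weights that grow with the distance between categories (linear or quadratic), which is equivalent to the agreement-weight form; the 3×3 matrix is hypothetical and assumes the categories are listed in their natural order.

```python
def weighted_kappa(matrix, weighting="linear"):
    """Weighted kappa from a square confusion matrix with ordered categories.

    Disagreement weight for cell (i, j) is (|i - j| / (k - 1)) ** power,
    with power 1 for linear weights and 2 for quadratic weights.
    """
    power = {"linear": 1, "quadratic": 2}[weighting]
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]

    observed = 0.0  # weighted observed disagreement (counts)
    expected = 0.0  # weighted disagreement expected by chance (counts)
    for i in range(k):
        for j in range(k):
            weight = (abs(i - j) / (k - 1)) ** power
            observed += weight * matrix[i][j]
            expected += weight * row_totals[i] * col_totals[j] / n
    return 1.0 - observed / expected


# Hypothetical ratings on a 3-level ordered scale.
ratings = [[10, 2, 0], [3, 10, 2], [0, 3, 10]]
linear = weighted_kappa(ratings, "linear")
quadratic = weighted_kappa(ratings, "quadratic")
print(round(linear, 3), round(quadratic, 3))
```

Quadratic weights penalize near-misses less than linear weights, so when most disagreements are between adjacent categories, as in this matrix, the quadratic value comes out higher.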

In Attribute Agreement Analysis, Minitab calculates Fleiss's kappa by default. When kappa < 0, agreement is weaker than expected by chance. In such a case, you should interpret the value of kappa to imply that there is no effective agreement between the two raters.

Kappa distinguishes very well between the agreement shown between pairs of Observers A and B, A and C, and A and D in Table 20.6.

